QwQ-32B

Matching R1 reasoning yet 20x smaller

2025-03-07

QwQ-32B, from Alibaba's Qwen team, is a new open-source 32B LLM that achieves DeepSeek-R1-level reasoning via scaled Reinforcement Learning. It features a 'thinking mode' for complex tasks.

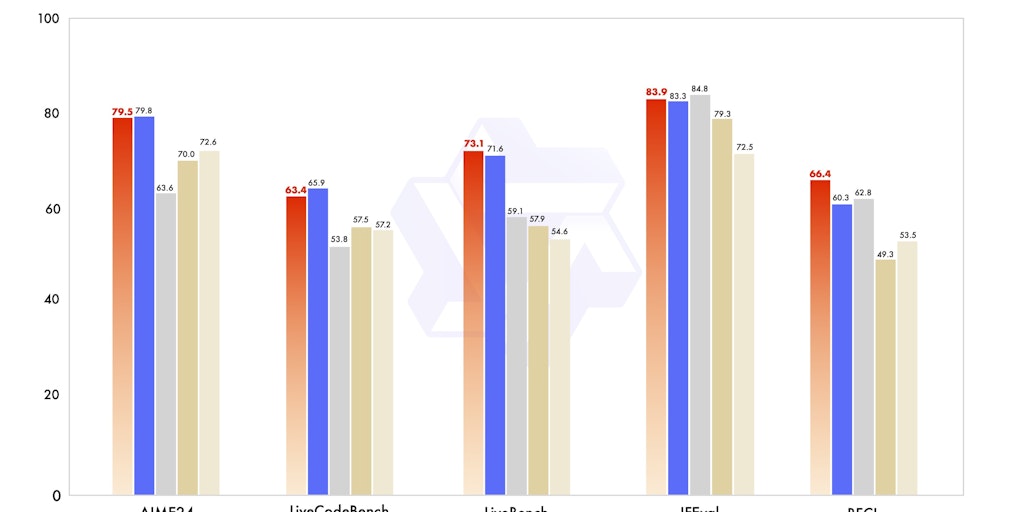

QwQ-32B, developed by Alibaba's Qwen team, is a 32-billion-parameter open-source language model built for reasoning tasks. Trained with scaled Reinforcement Learning, it is reported to match the reasoning performance of DeepSeek-R1 while having roughly 20 times fewer parameters (32B versus DeepSeek-R1's 671B). Its 'thinking mode', in which the model works through a problem step by step before answering, improves results on complex problems. Built on the Qwen2.5 architecture, it uses RoPE (rotary position embeddings), SwiGLU activations, and RMSNorm, and supports a context length of up to 131,072 tokens. QwQ-32B handles multi-turn conversations and long-context inputs, and can produce standardized output formats for tasks such as math problems and multiple-choice questions. It runs with Hugging Face Transformers and vLLM, giving developers a comparatively small model with strong reasoning performance.
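To make the 'thinking mode' and standardized math outputs concrete, here is a minimal sketch of how a developer might post-process a raw completion. It assumes the model wraps its reasoning in `<think>...</think>` and, when prompted to do so, places the final math answer inside `\boxed{...}`; neither convention is stated in this listing, and the helper names `split_thinking` and `extract_boxed` are hypothetical.

```python
from typing import Optional, Tuple

# Assumption: reasoning is delimited by <think>...</think> in the raw text.
THINK_OPEN = "<think>"
THINK_CLOSE = "</think>"
# Assumption: math prompts ask for the final answer inside \boxed{...}.
BOXED_OPEN = r"\boxed{"


def split_thinking(completion: str) -> Tuple[str, str]:
    """Split a raw completion into (reasoning, final_answer)."""
    head, sep, tail = completion.partition(THINK_CLOSE)
    if not sep:
        # No closing tag found: treat the whole text as the answer.
        return "", completion.strip()
    reasoning = head.replace(THINK_OPEN, "", 1).strip()
    return reasoning, tail.strip()


def extract_boxed(answer: str) -> Optional[str]:
    """Return the contents of the last boxed expression, or None."""
    start = answer.rfind(BOXED_OPEN)
    if start == -1:
        return None
    depth = 1  # we are already inside the opening brace
    collected = []
    for ch in answer[start + len(BOXED_OPEN):]:
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
            if depth == 0:
                return "".join(collected)
        collected.append(ch)
    return None  # braces never balanced
```

Separating the reasoning from the answer keeps long chains of thought out of multi-turn history and lets an evaluation script compare only the boxed result.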

Open Source

Artificial Intelligence