Qwen2.5-Omni

The end-to-end model powering multimodal chat

2025-03-27

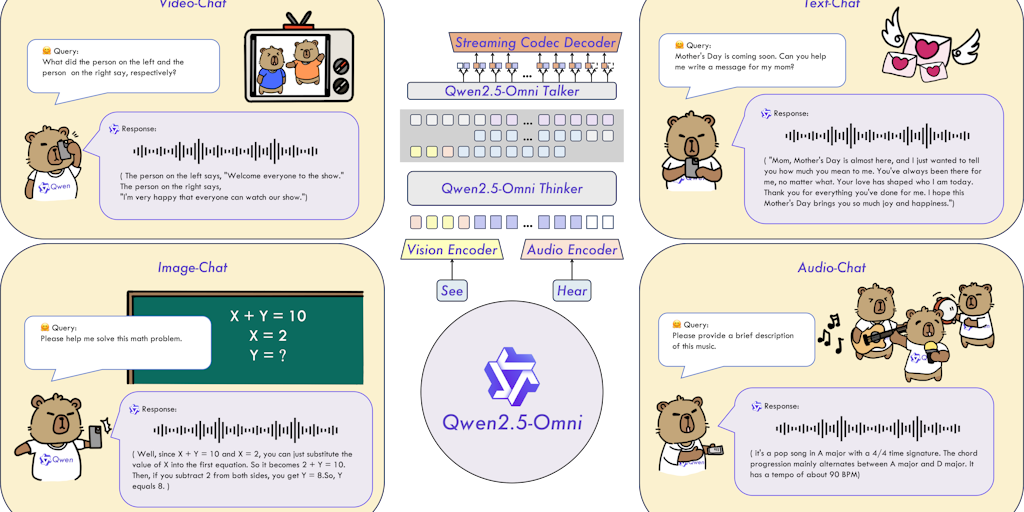

Qwen2.5-Omni is an end-to-end multimodal model by Qwen team at Alibaba Cloud, Understands text, images, audio & video; generates text & natural streaming speech.

Qwen2.5-Omni is Alibaba Cloud's advanced multimodal AI model, capable of processing and generating text, images, audio, and video in real time. Built with the Thinker-Talker architecture, it excels in seamless multimodal interactions, offering superior speech synthesis, robust audio understanding, and high performance across diverse tasks. The model supports real-time voice and video chat, delivering natural streaming responses. It outperforms similar-sized models in benchmarks like speech recognition, translation, and multimodal reasoning. Users can customize voice outputs and deploy it via APIs or web interfaces, making it ideal for dynamic, interactive applications. Available on platforms like Hugging Face and ModelScope, Qwen2.5-Omni is designed for developers seeking a versatile, end-to-end AI solution.

Open Source

Artificial Intelligence

GitHub

Audio