MiMo

Xiaomi's Open Source Model, Born for Reasoning

2025-04-30

An open-source (Apache 2.0) LLM series "born for reasoning." Its RL-tuned 7B model matches o1-mini on math and code. Base, SFT, and RL models are released.

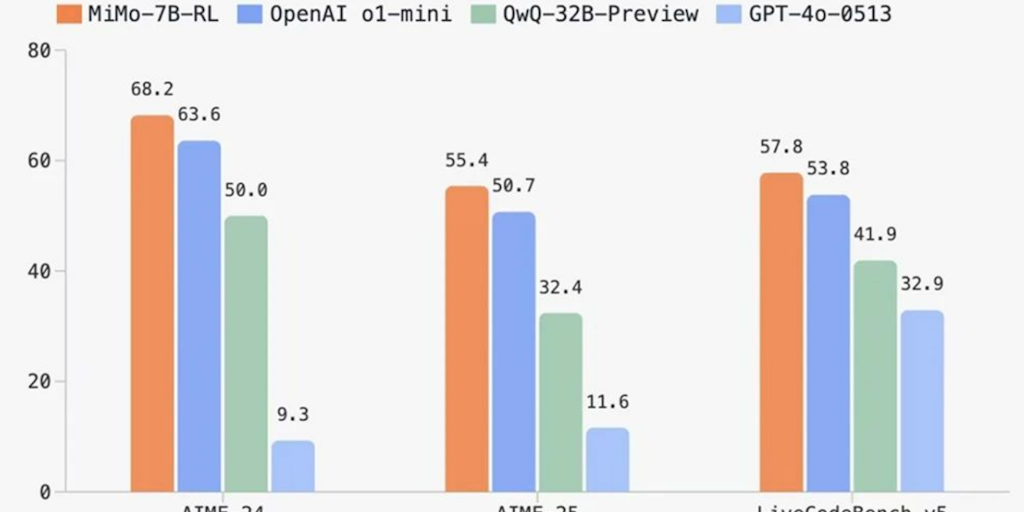

MiMo is an open-source language model series developed by Xiaomi and optimized specifically for reasoning tasks. Licensed under Apache 2.0, it includes pre-trained, supervised fine-tuned (SFT), and reinforcement learning (RL)-tuned models. The RL-tuned 7B variant (MiMo-7B-RL) matches OpenAI's o1-mini on math and code benchmarks.

MiMo's strength comes from targeting reasoning from the pre-training stage onward, using enhanced data filtering, synthetic reasoning data, and a three-stage pre-training strategy. Post-training applies RL to curated math and code problems scored with rule-based rewards. On the infrastructure side, a seamless rollout engine speeds up RL training.
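The article does not say how MiMo's rule-based rewards are computed. As a minimal sketch, assuming math answers end in a `\boxed{...}` expression compared by exact match and code is scored by the fraction of unit tests passed, a rule-based reward could look like this. The function names, the normalization, and the pass-rate scoring are hypothetical illustrations, not MiMo's actual implementation.

```python
import re
from typing import Callable, Optional, Sequence, Tuple

# Hypothetical rule-based rewards for RL on math and code problems.
# These are illustrative assumptions, NOT MiMo's actual reward code.


def extract_boxed_answer(text: str) -> Optional[str]:
    """Return the content of the last \\boxed{...} in text, handling nested braces."""
    start = text.rfind("\\boxed{")
    if start == -1:
        return None
    i = start + len("\\boxed{")
    depth = 1
    for j in range(i, len(text)):
        if text[j] == "{":
            depth += 1
        elif text[j] == "}":
            depth -= 1
            if depth == 0:
                return text[i:j]
    return None  # unbalanced braces: treat as no answer


def normalize(ans: str) -> str:
    # Light normalization: remove all whitespace and any trailing period.
    return re.sub(r"\s+", "", ans).rstrip(".")


def math_reward(completion: str, reference: str) -> float:
    """Binary reward: 1.0 if the boxed answer matches the reference, else 0.0."""
    pred = extract_boxed_answer(completion)
    if pred is None:
        return 0.0
    return 1.0 if normalize(pred) == normalize(reference) else 0.0


def code_reward(
    solution: Callable[..., object],
    tests: Sequence[Tuple[tuple, object]],
) -> float:
    """Fraction of (args, expected) test cases the solution passes.

    Simplification: real pipelines run generated code in a sandbox;
    here the candidate is already a Python callable.
    """
    if not tests:
        return 0.0
    passed = 0
    for args, expected in tests:
        try:
            if solution(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crashing test case counts as a failure
    return passed / len(tests)


if __name__ == "__main__":
    print(math_reward(r"so the answer is \boxed{\frac{1}{2}}.", r"\frac{1}{2}"))  # 1.0
    print(code_reward(lambda a, b: a + b, [((1, 2), 3), ((2, 2), 5)]))  # 0.5
```

A binary math reward and a pass-rate code reward are the simplest rule-based choices; they need no learned reward model, which makes them hard for the policy to exploit.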

Available models include the base, SFT, and RL-tuned variants, all hosted on Hugging Face. MiMo shows that a relatively small model can reach reasoning performance comparable to larger ones, a useful result for the AI community.