Circuit Tracer

Anthropic's open tools to see how AI thinks

2025-06-01

Anthropic's open-source Circuit Tracer helps researchers understand large language models (LLMs) by visualizing their internal computations as attribution graphs. Graphs can be explored interactively on Neuronpedia or generated with the accompanying library. The goal is greater AI transparency.

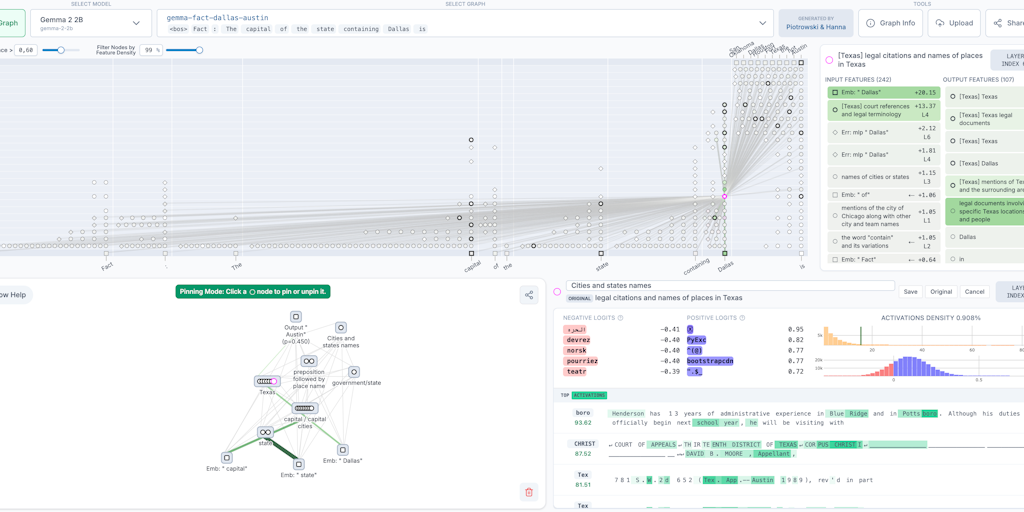

Anthropic's Circuit Tracer is an open-source tool that makes the internal computations of large language models (LLMs) easier to inspect. It generates attribution graphs, which map the intermediate steps a model takes to produce a specific output, so researchers can analyze how the model arrived at that output. The release has two parts: a library that generates these graphs for open-weights models, and an interactive frontend hosted by Neuronpedia for exploring them.

Developed through Anthropic's Fellows program, Circuit Tracer lets users trace a circuit, annotate it, and test hypotheses by modifying model features and observing how the output changes. It has already been used to study behaviors such as multilingual reasoning in models such as Gemma and Llama. By open-sourcing these tools, Anthropic aims to accelerate interpretability research and deepen understanding of AI systems, and it encourages the community to use them to find new insights into how models work.
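To make the idea of an attribution graph concrete, here is a minimal Python sketch of the underlying data structure. It is not the Circuit Tracer API: the `AttributionGraph` class, its methods, the node names, and all weights are hypothetical and invented for illustration. It only shows the general shape of the idea, a directed graph whose weighted edges say how strongly one node (an input token or an internal feature) contributes to another, ending at an output logit.

```python
from dataclasses import dataclass, field


@dataclass
class AttributionGraph:
    """Toy attribution graph (hypothetical, not the Circuit Tracer API).

    edges[src][dst] holds the attribution weight from node src to node dst.
    The graph is assumed to be acyclic.
    """

    edges: dict[str, dict[str, float]] = field(default_factory=dict)

    def add_edge(self, src: str, dst: str, weight: float) -> None:
        self.edges.setdefault(src, {})[dst] = weight
        self.edges.setdefault(dst, {})  # make sure the target node is registered

    def prune(self, threshold: float) -> "AttributionGraph":
        """Return a copy that keeps only edges with |weight| >= threshold."""
        pruned = AttributionGraph()
        for src, targets in self.edges.items():
            for dst, weight in targets.items():
                if abs(weight) >= threshold:
                    pruned.add_edge(src, dst, weight)
        return pruned

    def strongest_path(self, start: str, end: str) -> list[str]:
        """Find the start-to-end path with the largest product of |weights|."""
        best_score, best_path = 0.0, []

        def visit(node: str, score: float, path: list[str]) -> None:
            nonlocal best_score, best_path
            if node == end:
                if score > best_score:
                    best_score, best_path = score, path
                return
            for nxt, weight in self.edges.get(node, {}).items():
                visit(nxt, score * abs(weight), path + [nxt])

        visit(start, 1.0, [start])
        return best_path


# Made-up example: a French prompt asking for the opposite of "petit".
graph = AttributionGraph()
graph.add_edge("token:petit", "feature:small", 0.9)
graph.add_edge("token:contraire", "feature:antonym", 0.8)
graph.add_edge("feature:small", "feature:large", 0.7)
graph.add_edge("feature:antonym", "feature:large", 0.6)
graph.add_edge("feature:large", "logit:grand", 0.9)
graph.add_edge("token:petit", "logit:grand", 0.05)  # weak direct edge

pruned = graph.prune(threshold=0.1)  # drops the weak direct edge
path = graph.strongest_path("token:petit", "logit:grand")
print(" -> ".join(path))
```

In this toy graph the strongest route from the input token to the output passes through two intermediate features instead of the weak direct edge, which is the kind of multi-step structure an attribution graph is meant to expose. Testing a hypothesis in the real tool means intervening on the model's features, which a static graph like this one does not capture.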

Open Source

Artificial Intelligence

GitHub