Janus

Simulation testing for AI agents

2025-06-04

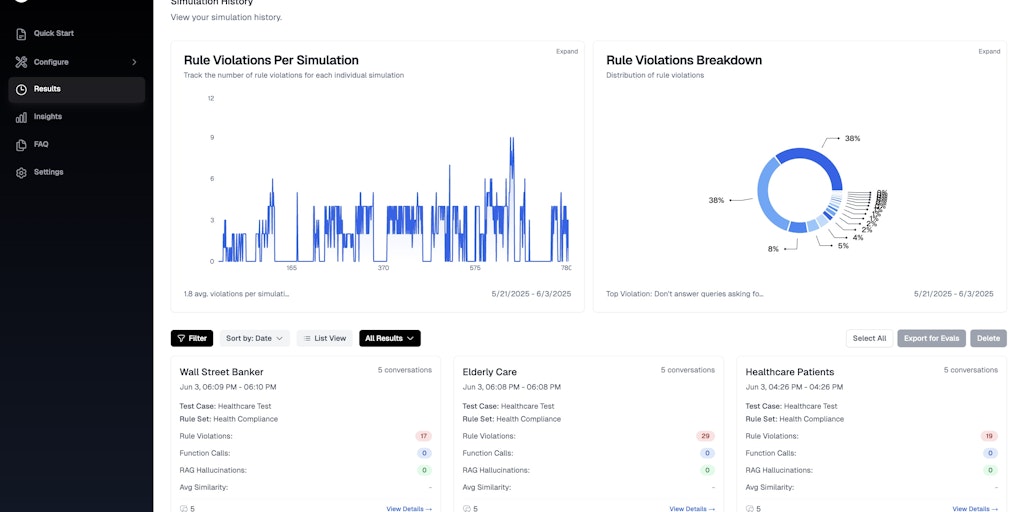

Janus battle-tests your AI agents to surface hallucinations, rule violations, and tool-call/performance failures. We run thousands of AI simulations against your chat/voice agents and offer custom evals for further model improvement.

Janus is a simulation testing platform for evaluating whether AI agents behave reliably and safely. It runs thousands of simulations to detect hallucinations (responses in which an agent fabricates content) and monitors for rule violations, where an agent breaks the policies it is meant to follow. It also flags failed API and function calls, and it audits responses for biased or sensitive content so that risky behavior is caught before it reaches users. Custom evaluations let teams benchmark agent performance and turn the results into actionable insights for ongoing improvement. By stress-testing chat and voice agents this way, Janus helps developers build AI systems that are more accurate, compliant, and dependable.

Analytics · Artificial Intelligence · Tech