LangWatch Scenario - Agent Simulations

Agentic testing for agentic codebases

2025-06-26

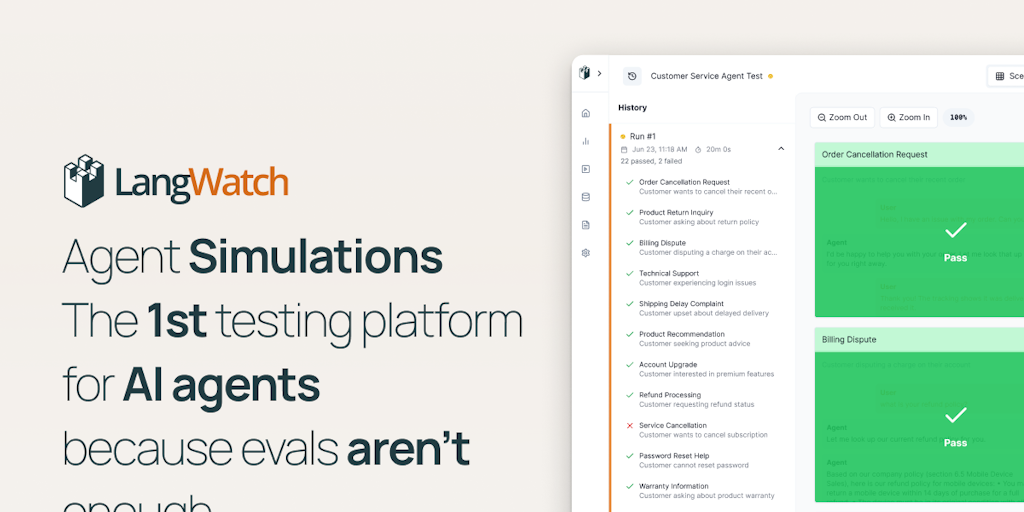

As AI agents grow more complex (reasoning, using tools, and making decisions), traditional evals fall short. LangWatch Scenario simulates real-world interactions to test agent behavior. It’s like unit testing, but for AI agents.

LangWatch Scenario tests AI agents by simulating real-world interactions, checking that they perform reliably in complex scenarios. Unlike traditional evaluations, it automates realistic user behaviors and edge cases, catching bugs before deployment. The framework integrates with over 10 AI agent frameworks in Python and TypeScript, so it fits a wide range of LLM applications.
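To make the "unit testing, but for AI agents" idea concrete, here is a minimal, library-free sketch of a simulation test in Python. It does not use the LangWatch Scenario API: `run_simulation`, `SimulationResult`, and `toy_refund_agent` are hypothetical names invented for illustration, the "simulated user" is just a scripted list of turns, and the agent is a rule-based stand-in rather than an LLM.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical types for illustration only; not the LangWatch Scenario API.
Message = Dict[str, str]
Agent = Callable[[List[Message]], str]
Check = Callable[[List[Message]], bool]


@dataclass
class SimulationResult:
    passed: bool
    transcript: List[Message]
    failures: List[str]


def run_simulation(
    agent: Agent, user_turns: List[str], checks: Dict[str, Check]
) -> SimulationResult:
    """Replay scripted user turns against the agent, then evaluate named checks."""
    transcript: List[Message] = []
    for turn in user_turns:
        transcript.append({"role": "user", "content": turn})
        reply = agent(transcript)  # the agent sees the whole conversation so far
        transcript.append({"role": "assistant", "content": reply})
    # Collect the name of every check that does not hold on the final transcript.
    failures = [name for name, check in checks.items() if not check(transcript)]
    return SimulationResult(passed=not failures, transcript=transcript, failures=failures)


def toy_refund_agent(messages: List[Message]) -> str:
    """Rule-based stand-in for a real agent (assumption: a real one would call an LLM)."""
    last = messages[-1]["content"].lower()
    if "refund" in last:
        return "I can help with a refund. What is your order number?"
    if any(ch.isdigit() for ch in last):
        return "Thanks, your refund request has been submitted."
    return "Could you tell me more about the issue?"


result = run_simulation(
    toy_refund_agent,
    user_turns=["I want a refund", "It's order 1234"],
    checks={
        "asks_for_order_number": lambda t: "order number" in t[1]["content"],
        "confirms_submission": lambda t: "submitted" in t[-1]["content"],
    },
)
assert result.passed, result.failures
```

Because the checks are named, a failing run reports which expectation broke, which is the kind of signal a regression suite needs. In a real setup the scripted turns would typically be replaced by a generated user and the string checks by richer criteria.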

Designed for teams, it enables collaboration with domain experts who can annotate and refine agent behavior without coding. Features include version-controlled test suites, regression detection, and detailed failure analysis. LangWatch also offers enterprise-grade observability, debugging, and optimization tools, ensuring compliance and security.

Fully open-source, it supports self-hosting or cloud deployment, with GDPR compliance and role-based access controls. This makes it ideal for production-ready AI deployments at scale.

Open Source

Artificial Intelligence

Development