Qwen3-235B-A22B-Thinking-2507

Qwen's most advanced reasoning model yet

2025-07-26

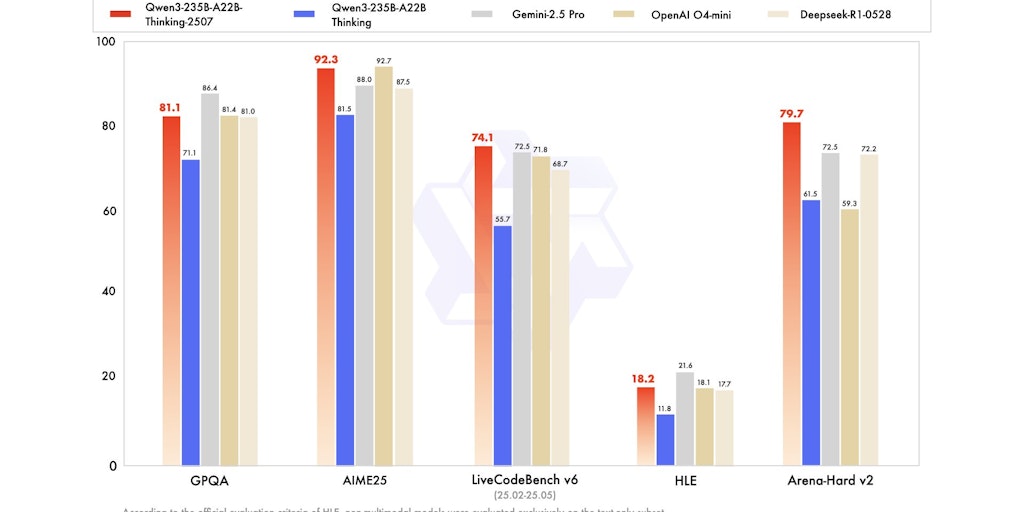

Qwen3-235B-A22B-Thinking-2507 is a powerful open-source MoE model (22B active) built for deep reasoning. It achieves SOTA results on agentic tasks, supports a 256K context, and is available on Hugging Face and via API.

Qwen3-235B-A22B-Thinking-2507 is an open-source Mixture of Experts (MoE) model designed for complex reasoning tasks. Of its 235 billion total parameters, 22 billion are active per token. It reports state-of-the-art performance in logical reasoning, mathematics, coding, and agentic tasks. The 256K context window allows deeper analysis and more coherent long-form output. Key improvements include better instruction following, tool use, and text generation aligned with human preferences. Reported benchmarks show stronger results than leading models on academic and multilingual tasks. The model is available on Hugging Face and via API, and it can be deployed with frameworks such as vLLM and SGLang. It suits research and applications that require high-level reasoning, and its integrated tool-calling support makes it a good fit for agentic workflows. Note: this version operates exclusively in thinking mode, prioritizing detailed reasoning over standard chat responses.
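Because the model always runs in thinking mode, an application usually needs to separate the reasoning trace from the final answer before showing a response. The sketch below illustrates one way to do that. It assumes the generated text ends its reasoning with a `</think>` marker, optionally preceded by an opening `<think>`. That delimiter is an assumption here and should be checked against the model card. `split_reasoning` and `ParsedOutput` are hypothetical helper names, not part of any Qwen library.

```python
from typing import NamedTuple

# Assumed delimiters for the reasoning trace; verify against the model card.
THINK_START = "<think>"
THINK_END = "</think>"


class ParsedOutput(NamedTuple):
    reasoning: str  # the model's reasoning trace (may be empty)
    answer: str     # the text intended for the end user


def split_reasoning(text: str) -> ParsedOutput:
    """Split raw generated text into (reasoning, answer).

    Splits on the last occurrence of THINK_END. If the marker is absent,
    the whole text is returned as the answer with empty reasoning.
    """
    head, sep, tail = text.rpartition(THINK_END)
    if not sep:
        # No closing marker found: reasoning cannot be told apart from answer.
        return ParsedOutput("", text.strip())

    reasoning = head.strip()
    # Drop an opening marker if the output happens to include one.
    if reasoning.startswith(THINK_START):
        reasoning = reasoning[len(THINK_START):].strip()
    return ParsedOutput(reasoning, tail.strip())


if __name__ == "__main__":
    sample = "2 + 2 is basic arithmetic.\n</think>\n\nThe answer is 4."
    parsed = split_reasoning(sample)
    print("reasoning:", parsed.reasoning)
    print("answer:", parsed.answer)
```

Treating marker-free text as the answer is a design choice. A generation cut off before the marker would really consist of reasoning only, so a stricter caller may prefer to raise an error in that case.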

API

Open Source

Artificial Intelligence